The Role of AGI in Cybernetic Immortality

Ben Goertzel, Ph.D.


In practice, you can think about an AGI system as one that has the capacity to reflect on itself and to creatively learn and adapt. There's been distressingly little research in the AGI field, I think because it's more difficult pragmatically than narrow AI research, and more difficult mathematically than totally general AI research. It's just hard. Yet of course, it's the most interesting thing in the long run.

I've been harping on the term "general intelligence," but there are also some other related terms. It's been interesting to see that in the last few years there have been a number of workshops on the topic of "human level intelligence" within mainstream AI conferences. I don't like the term "human level AI" very much because I think it sets the goal too low. I don't think humans are that intelligent in the scope of all possible minds. We should be setting our sights much higher than that. I also think "human level" is kind of ambiguous. What does it really mean? I understand what "human-like" means, but "human level" for a radically non-human intelligence is kind of poorly defined.

My colleague Bruce Klein [1] helped me organize a workshop on artificial general intelligence this past May, which brought various people from the futurist community together with a number of AI researchers from industry and academia. I think it was probably the first large-scale collision between academic AI guys and radical futurists. Stan Franklin [2] of the University of Memphis, who's fairly well known, was there, as were Sam Adams [3], leader of IBM's Joshua Blue project, and a number of other academic and industry AI researchers. They were fairly conservative guys, and it was interesting to see them put together with Eliezer Yudkowsky [4], Hugo de Garis [5], and a bunch of the more outspoken visionaries in the AI futurist community. It was interesting how small the gap between these various guys actually was; many of the academic AI guys really had more of an interest in general intelligence and superhuman AI than they commonly liked to admit within the academic context.

There's also an edited volume, which I co-edited, that gathers together a number of papers on general intelligence. It's called Artificial General Intelligence, published by Springer.

Image 5: Artificial General Intelligence Report

In 2007 IOS Press will publish the Proceedings of the AGI workshop.

Nick Cassimatis recently edited an issue of AI Magazine on the topic of human level intelligence. The field is building up a little bit of momentum. I have a feeling that somewhere within the next 5 to 15 years, to be conservative, you're going to see a renaissance of AGI, or human level intelligence, research within the AI community.

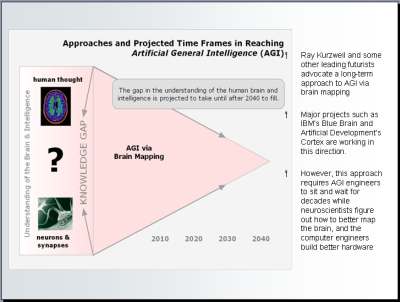

Image 6

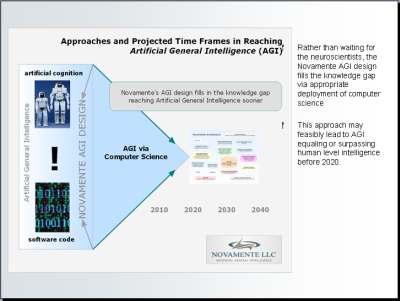

Image 7

I can see it building up because 5 years ago there were no workshops, special sessions, or anything on this kind of topic within mainstream AI conferences. Now at least there's some little corner being carved out for AGI. Out of 2,000 people at an AI conference, now there are at least 50 people who are interested in talking about human level general intelligence, which is progress.

All it's going to take is one exciting announcement: that someone has achieved even a moderate breakthrough in AGI. Then people will jump all over it, and the field will really explode.

Footnotes

[1]. Bruce Klein - born April 11, 1974; President of Novamente LLC, a privately held AI software company focused on Artificial General Intelligence (AGI). He also directs the non-profit Artificial General Intelligence Research Institute (AGIRI) and helped organize the first AGI Workshop in May 2006. http://www.answers.com/topic/bruce-klein (accessed February 8, 2007, 2:25 pm EST)

[2]. Stan Franklin - Professor of Computer Science at the University of Memphis, TN. http://www.msci.memphis.edu/~franklin (accessed February 8, 2007, 2:25 pm EST)

[3]. Sam S. Adams - an IBM Distinguished Engineer within IBM's Research Division, Watson Research Center (Hawthorne), and leader of IBM's Joshua Blue Project, which applies ideas from complexity theory and evolutionary computational design to the simulation of mind on a computer. domino.research.ibm.com (accessed February 8, 2007, 2:41 pm EST) and http://www.csupomona.edu/~nalvarado/PDFs/AAAI.pdf (accessed February 8, 2007, 2:43 pm EST)

[4]. Eliezer S. Yudkowsky - an American self-proclaimed artificial intelligence researcher concerned with the Singularity, and an advocate of Friendly Artificial Intelligence. Wikipedia.org (accessed February 8, 2007, 2:46 pm EST)

[5]. Hugo de Garis - born 1947 in Sydney, Australia; became an associate professor of computer science at Utah State University. He is one of the more notable researchers in the sub-field of artificial intelligence known as evolvable hardware, which involves evolving neural net circuits directly in hardware to build artificial brains. Wikipedia.org (accessed February 8, 2007, 2:48 pm EST)