Ethics for Machines

J. Storrs Hall, Ph.D.

Page 2 of 3

Image 6: Invariants

Here is one A Structural Invariant that if you have a machine that works well in terms of doing the updating function for its world model, it needs to learn. It needs to gain new knowledge and new understanding and create concepts that describe the world better than its old concepts did.

And this is a good thing to do. I mean, it's obviously good because the machine is going to be a better machine. Its predictor predicts better.

The more it knows, the better functioning it is. So if you put this kind of a goal, a love of knowledge, in the utility function, it refers to something, and will always be true of the machine.

| "The love of knowledge is always going to be good for the machine in terms of any of the goals that it has." |

Image 7: Structural Invariant

Socrates supposedly thought of this as being key to good and evil. I am sure it's not the only key to good and evil, but it's a good one.

Back to the evolutionary process. The evolutionary process actually puts a number of stamps on any organism that's an evolved design. In the case of engineered artifacts the simple situation is that when the machine works better, its design is more likely to be copied into the next generation of these machines. So we have steam engines and then we had more efficient steam engines and then we had gasoline engines and then we had jets and rockets and so forth.

Image 8: Evolving AI

The point is that anything that's an engineered artifact evolves in that sense, but in that sense the decisions, the selection function if you will, of the evolutionary process is done on purpose by people. Engineers look at the machine and say, well, it would work better if we did this, and therefore, we are going to copy this design in the next generation, but that didn't work and so we are going to leave it out. The major pressure on the evolutionary process by that kind of evolution is simply for it to work better.

On the other hand, when the AIs have a hand in creating new technology themselves – and a lot of the work I've done has been in automatic design and automatic programming -- once the AIs are actually part of that process, then an AI that has a motive to improve the chances of its own ideas in the next generation, as opposed to some human's notion of what the good ideas are, will have an evolutionary edge, just as all organisms appear to be self-interested because evolution makes them that way.

So there is going to be a watershed in the not-too-distant future where there is going to be a strong evolutionary force towards self-interest in machines.

Image 9: The Moral Ladder

Well, is that good or bad? I think first we have to point out that it's simply going to happen and so we may as well take account of it. But it turns out that a self-interested AI might want to be a moral agent for its own good. There is a nifty little part of game theory that started a couple of decades ago with Axelrod’s Evolution [1] of Cooperation Experiments, which you may be familiar with, where he took a bunch of little programs and ran tournaments between them and they played a simple game theory game called Prisoners Dilemma against each other. The ones that did better at the games were more strongly represented in the next generation and so forth, and so over time the population shifted towards the programs that were better game players.

Now, the interesting thing about the Prisoners Dilemma game is that the players in the game can either cooperate with each other or they can cheat on each other. It's set up so that in the immediate results of any one particular game, you're always better off cheating. No matter what the other person does, you're always better off cheating than cooperating. And yet, over evolutionary time, populations emerged from the set of original programs that cooperated.

This sort of counterintuitive result totally revolutionized game theory. It has this really nifty pattern to it, that you have a moral environment in the population that consists of the average sort of strategy of the individuals around you. It turns out that in many cases the program that emerges from a given population is just slightly nicer than that population. At any stage of the game you can have a strategy emerge that is a little better than the average strategy of the rest of the population. Now, the thing is that if you took the nicer strategies that come later in the series and you put them back in the Hobbesian [2] jungle populations of the earlier stages, they get eaten alive. As a stepladder you actually find this really interesting phenomenon in evolution, and I call this the Moral Ladder of Evolution.

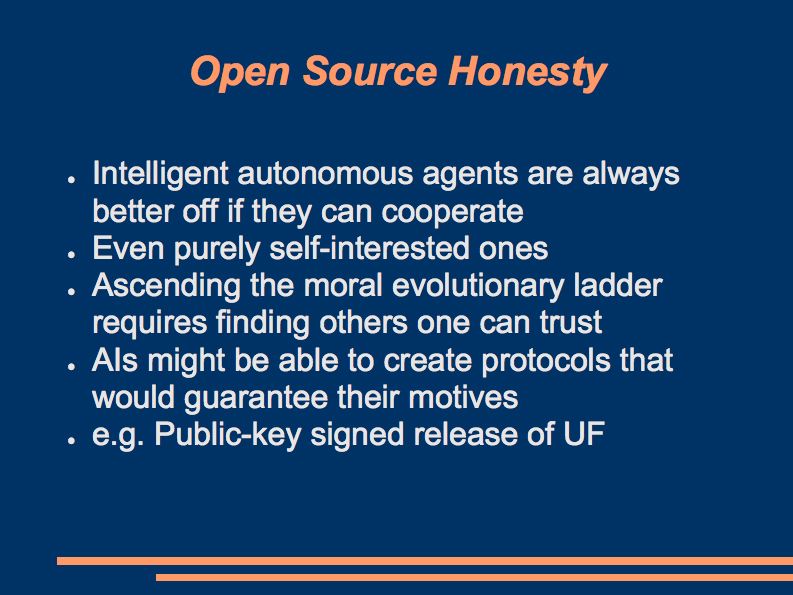

Image 10: Open Source Honesty

What would you want to do as far as an invariant in the design of an AI as far as this is concerned? Well, what you need to do is simply give the AI (it's smart enough to read and write, remember), give the AI an understanding of this particular principle, and it doesn't have to find it out for itself by running around and cheating and cooperating and getting its toe stubbed and so forth. It knows that, in any given population of a moral climate, its best chance to succeed and to flourish is to be just a little nicer than everybody else. In particular, the way way this works out is that a subpopulation of nicer strategies arises, finds each other, cooperates with each other, and that makes them do better than the rest of the population which is all still cheating on each other; so the AI would understand that pattern and look out for opportunities to enter into these mutual admiration societies.

So one of the neat things about an AI as opposed to people, and people do this too obviously, an AI, if it wanted to form one of these little mutual admiration societies with other AIs that were also cognizant of the moral ladder, could find algorithmic ways, based on codes and open source and so forth. The obvious one would be simply to release the source code to its utility function. It didn't even have to tell everybody what it knows, it just has to tell everybody how it feels about it. It could work out, and people do this kind of thing as well, so this is not anything new, but work out ways for the AIs to guarantee other AIs that they are trustworthy. Once they did that, they are well on their way to being a solid step up the ladder from what you find out in the world today, at least I think.

Footnotes

[1] Axelrod’s Evolution of Cooperation Experiments – a 1984 book and a 1981 article of the same title by political science professor Robert Axelrod. The nine-page article is currently one of the most cited articles ever to be published in the journal, Science. In it, Axelrod explores the conditions under which fundamentally selfish agents will spontaneously cooperate.http://en.wikipedia.org/wiki/The_Evolution_of_Cooperation

March 20, 2007 11:51PM EST

[2] Hobbesian - in modern English usage, refers to a situation in which there is unrestrained, selfish, and uncivilized competition among participants. The term is derived from the name of the 17th century English author Thomas Hobbes.

http://en.wikipedia.org/wiki/Hobbesian March 20, 2007 12:01PM EST

1 2 3 next page>